Player FM - Internet Radio Done Right

12 subscribers

Checked 3d ago

Added three years ago

Content provided by LessWrong. All podcast content, including episodes, images, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process described at https://nl.player.fm/legal.



“My theory of change for working in AI healthtech” by Andrew_Critch


This post starts out pretty gloomy but ends up with some points that I feel pretty positive about. Day to day, I'm more focussed on the positive points, but awareness of the negative has been crucial to forming my priorities, so I'm going to start with those. It's mostly addressed to the EA community, but is hopefully somewhat of interest to LessWrong and the Alignment Forum as well.

My main concerns

I think AGI is going to be developed soon, and quickly. Possibly (20%) that's next year, and most likely (80%) before the end of 2029. These are not things you need to believe for yourself in order to understand my view, so no worries if you're not personally convinced of this.

(For what it's worth, I did arrive at this view through years of study and research in AI, combined with over a decade of private forecasting practice [...]

---

Outline:

(00:28) My main concerns

(03:41) Extinction by industrial dehumanization

(06:00) Successionism as a driver of industrial dehumanization

(11:08) My theory of change: confronting successionism with human-specific industries

(15:53) How I identified healthcare as the industry most relevant to caring for humans

(20:00) But why not just do safety work with big AI labs or governments?

(23:22) Conclusion

The original text contained 1 image which was described by AI.

---

First published:

October 12th, 2024

Source:

https://www.lesswrong.com/posts/Kobbt3nQgv3yn29pr/my-theory-of-change-for-working-in-ai-healthtech

---

Narrated by TYPE III AUDIO.

---


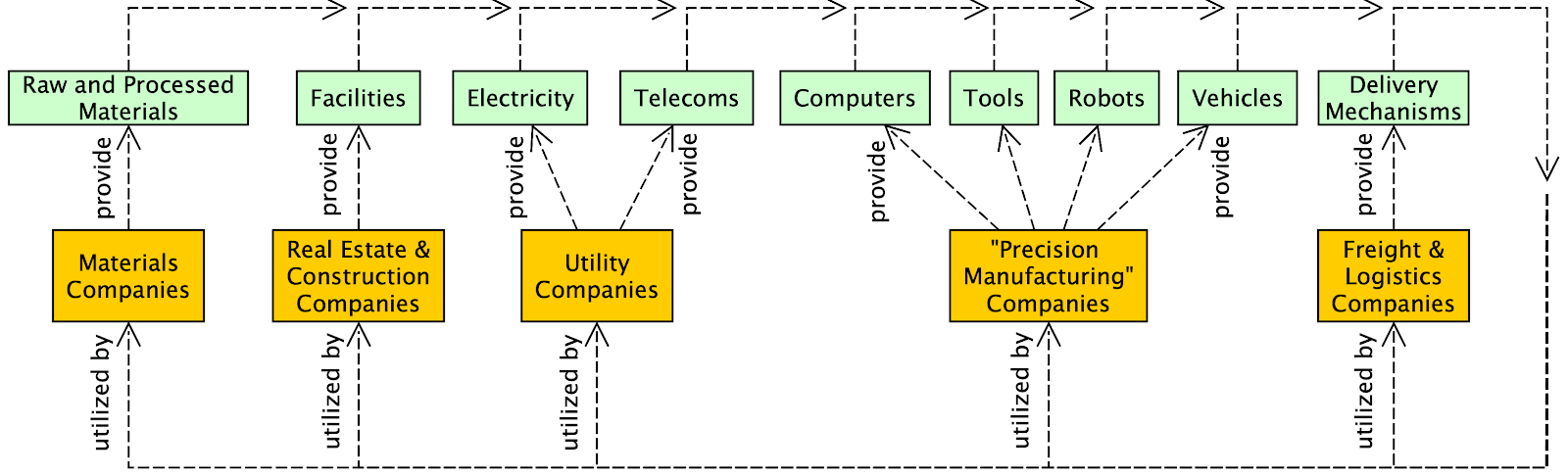

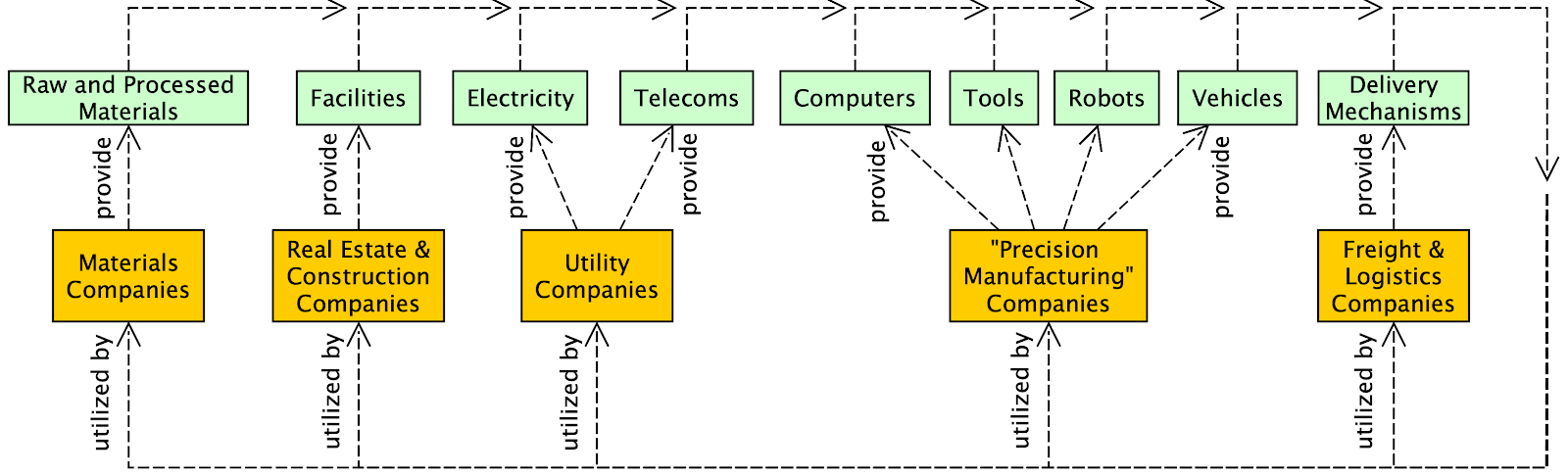

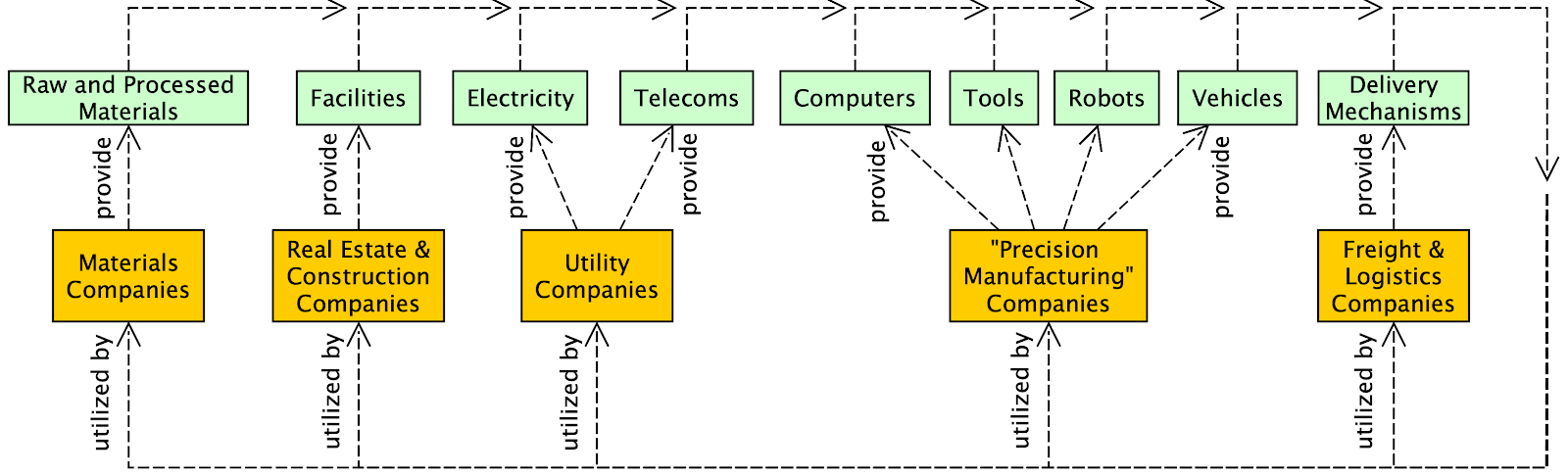

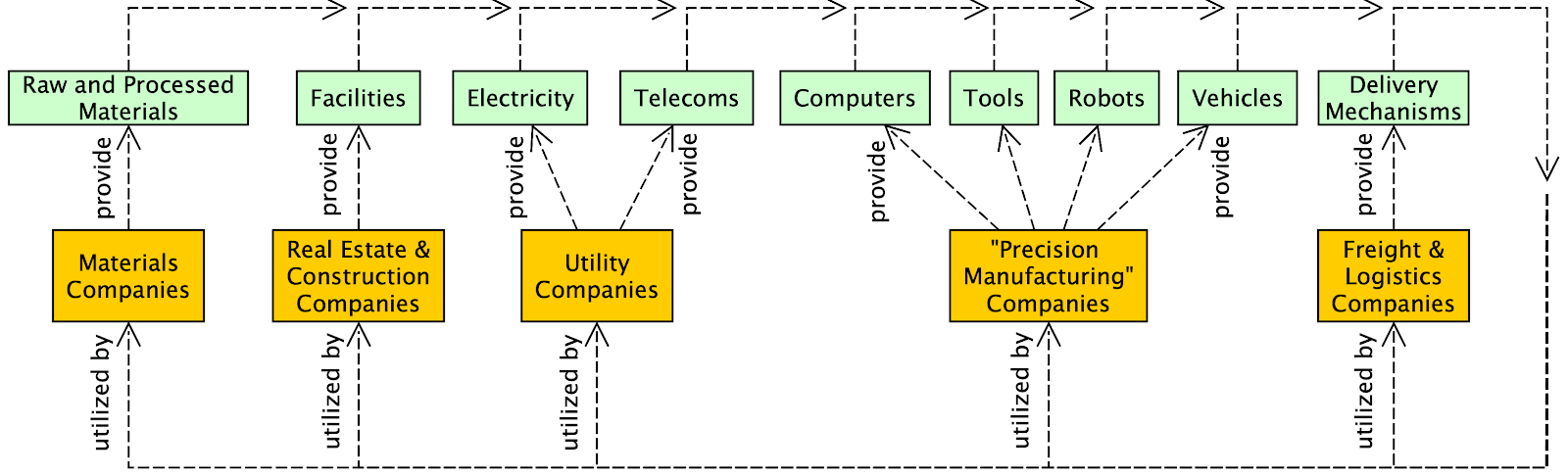

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.490 afleveringen


All episodes

“In the loveliest town of all, where the houses were white and high and the elms trees were green and higher than the houses, where the front yards were wide and pleasant and the back yards were bushy and worth finding out about, where the streets sloped down to the stream and the stream flowed quietly under the bridge, where the lawns ended in orchards and the orchards ended in fields and the fields ended in pastures and the pastures climbed the hill and disappeared over the top toward the wonderful wide sky, in this loveliest of all towns Stuart stopped to get a drink of sarsaparilla.” — 107-word sentence from Stuart Little (1945)

Sentence lengths have declined. The average sentence length was 49 for Chaucer (died 1400), 50 for Spenser (died 1599), 42 for Austen (died 1817), 20 for Dickens (died 1870), 21 for Emerson (died 1882), 14 [...]

---

First published:

April 3rd, 2025

Source:

https://www.lesswrong.com/posts/xYn3CKir4bTMzY5eb/why-have-sentence-lengths-decreased

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

“AI 2027: What Superintelligence Looks Like” by Daniel Kokotajlo, Thomas Larsen, elifland, Scott Alexander, Jonas V, romeo (54:30)

In 2021 I wrote what became my most popular blog post: What 2026 Looks Like. I intended to keep writing predictions all the way to AGI and beyond, but chickened out and just published up till 2026. Well, it's finally time. I'm back, and this time I have a team with me: the AI Futures Project. We've written a concrete scenario of what we think the future of AI will look like. We are highly uncertain, of course, but we hope this story will rhyme with reality enough to help us all prepare for what's ahead.

You really should go read it on the website instead of here; it's much better. There's a sliding dashboard that updates the stats as you scroll through the scenario! But I've nevertheless copied the first half of the story below. I look forward to reading your comments.

Mid 2025: Stumbling Agents

The [...]

---

Outline:

(01:35) Mid 2025: Stumbling Agents

(03:13) Late 2025: The World's Most Expensive AI

(08:34) Early 2026: Coding Automation

(10:49) Mid 2026: China Wakes Up

(13:48) Late 2026: AI Takes Some Jobs

(15:35) January 2027: Agent-2 Never Finishes Learning

(18:20) February 2027: China Steals Agent-2

(21:12) March 2027: Algorithmic Breakthroughs

(23:58) April 2027: Alignment for Agent-3

(27:26) May 2027: National Security

(29:50) June 2027: Self-improving AI

(31:36) July 2027: The Cheap Remote Worker

(34:35) August 2027: The Geopolitics of Superintelligence

(40:43) September 2027: Agent-4, the Superhuman AI Researcher

---

First published:

April 3rd, 2025

Source:

https://www.lesswrong.com/posts/TpSFoqoG2M5MAAesg/ai-2027-what-superintelligence-looks-like-1

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Back when the OpenAI board attempted and failed to fire Sam Altman, we faced a highly hostile information environment. The battle was fought largely through control of the public narrative, and the above was my attempt to put together what happened.

My conclusion, which I still believe, was that Sam Altman had engaged in a variety of unacceptable conduct that merited his firing.

In particular, he had very much ‘not been consistently candid’ with the board on several important occasions. Specifically, he lied to board members about what was said by other board members, with the goal of forcing out a board member he disliked. There were also other instances in which he misled and was otherwise toxic to employees, and he played fast and loose with the investment fund and other outside opportunities. I concluded that the story that this was about ‘AI safety’ or ‘EA (effective altruism)’ or [...]

---

Outline:

(01:32) The Big Picture Going Forward

(06:27) Hagey Verifies Out the Story

(08:50) Key Facts From the Story

(11:57) Dangers of False Narratives

(16:24) A Full Reference and Reading List

---

First published:

March 31st, 2025

Source:

https://www.lesswrong.com/posts/25EgRNWcY6PM3fWZh/openai-12-battle-of-the-board-redux

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Epistemic status: This post aims at an ambitious target: improving intuitive understanding directly. The model for why this is worth trying is that I believe we are more bottlenecked by people having good intuitions guiding their research than, for example, by the ability of people to code and run evals.

Quite a few ideas in AI safety implicitly use assumptions about individuality that ultimately derive from human experience. When we talk about AIs scheming, alignment faking or goal preservation, we imply there is something scheming or alignment faking or wanting to preserve its goals or escape the datacentre. If the system in question were human, it would be quite clear what that individual system is. When you read about Reinhold Messner reaching the summit of Everest, you would be curious about the climb, but you would not ask if it was his body there, or his [...]

---

Outline:

(01:38) Individuality in Biology

(03:53) Individuality in AI Systems

(10:19) Risks and Limitations of Anthropomorphic Individuality Assumptions

(11:25) Coordinating Selves

(16:19) What's at Stake: Stories

(17:25) Exporting Myself

(21:43) The Alignment Whisperers

(23:27) Echoes in the Dataset

(25:18) Implications for Alignment Research and Policy

---

First published:

March 28th, 2025

Source:

https://www.lesswrong.com/posts/wQKskToGofs4osdJ3/the-pando-problem-rethinking-ai-individuality

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.


I'm not writing this to alarm anyone, but it would be irresponsible not to report on something this important. On current trends, every car will be crashed in front of my house within the next week.

Here's the data: Until today, only two cars had crashed in front of my house, several months apart, during the 15 months I have lived here. But a few hours ago it happened again, mere weeks from the previous crash.

This graph may look harmless enough, but now consider the frequency of crashes this implies over time:

The car crash singularity will occur in the early morning hours of Monday, April 7. As crash frequency approaches infinity, every car will be involved.

You might be thinking that the same car could be involved in multiple crashes. This is true! But the same car can only withstand a finite number of crashes before it [...]

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/FjPWbLdoP4PLDivYT/you-will-crash-your-car-in-front-of-my-house-within-the-next

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

“My ‘infohazards small working group’ Signal Chat may have encountered minor leaks” by Linch (10:33)

Remember: There is no such thing as a pink elephant.

Recently, I was made aware that my “infohazards small working group” Signal chat, an informal coordination venue where we have frank discussions about infohazards and why it would be bad if specific hazards were leaked to the press or public, was accidentally shared with a deceitful and discredited so-called “journalist,” Kelsey Piper. She is not the first person to have been accidentally sent sensitive material from our group chat; however, she is the first to have threatened to go public about the leak. Needless to say, mistakes were made.

We’re still trying to figure out the source of this compromise to our secure chat group; however, we thought we should give the public a live update to get ahead of the story. For some context, the “infohazards small working group” is a casual discussion venue for the [...]

---

Outline:

(04:46) Top 10 PR Issues With the EA Movement (major)

(05:34) Accidental Filtration of Simple Sabotage Manual for Rebellious AIs (medium)

(08:25) Hidden Capabilities Evals Leaked In Advance to Bioterrorism Researchers and Leaders (minor)

(09:34) Conclusion

---

First published:

April 2nd, 2025

Source:

https://www.lesswrong.com/posts/xPEfrtK2jfQdbpq97/my-infohazards-small-working-group-signal-chat-may-have

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

“Leverage, Exit Costs, and Anger: Re-examining Why We Explode at Home, Not at Work” by at_the_zoo (6:16)

Let's cut through the comforting narratives and examine a common behavioral pattern with a sharper lens: the stark difference between how anger is managed in professional settings versus domestic ones. Many individuals can navigate challenging workplace interactions with remarkable restraint, only to unleash significant anger or frustration at home shortly after. Why does this disparity exist?

Common psychological explanations trot out concepts like "stress spillover," "ego depletion," or the home being a "safe space" for authentic emotions. While these factors might play a role, they feel like half-truths—neatly packaged but ultimately failing to explain the targeted nature and intensity of anger displayed at home.

This analysis proposes a more unsentimental approach, rooted in evolutionary biology, game theory, and behavioral science: leverage and exit costs. The real question isn’t just why we explode at home—it's why we so carefully avoid doing so elsewhere.

The Logic of Restraint: Low Leverage in [...]

---

Outline:

(01:14) The Logic of Restraint: Low Leverage in Low-Exit-Cost Environments

(01:58) The Home Environment: High Stakes and High Exit Costs

(02:41) Re-evaluating Common Explanations Through the Lens of Leverage

(04:42) The Overlooked Mechanism: Leveraging Relational Constraints

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/G6PTtsfBpnehqdEgp/leverage-exit-costs-and-anger-re-examining-why-we-explode-at

---

Narrated by TYPE III AUDIO.

In the debate over AI development, two movements stand as opposites: PauseAI calls for slowing down AI progress, and e/acc (effective accelerationism) calls for rapid advancement. But what if both sides are working against their own stated interests? What if the most rational strategy for each would be to adopt the other's tactics—if not their ultimate goals?

AI development speed ultimately comes down to policy decisions, which are themselves downstream of public opinion. No matter how compelling technical arguments might be on either side, widespread sentiment will determine what regulations are politically viable.

Public opinion is most powerfully mobilized against technologies following visible disasters. Consider nuclear power: despite being statistically safer than fossil fuels, its development has been stagnant for decades. Why? Not because of environmental activists, but because of Chernobyl, Three Mile Island, and Fukushima. These disasters produce visceral public reactions that statistics cannot overcome. Just as people [...]

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/fZebqiuZcDfLCgizz/pauseai-and-e-acc-should-switch-sides

---

Narrated by TYPE III AUDIO.

Introduction

Decision theory is about how to behave rationally under conditions of uncertainty, especially if this uncertainty involves being acausally blackmailed and/or gaslit by alien superintelligent basilisks. Decision theory has found numerous practical applications, including proving the existence of God and generating endless LessWrong comments since the beginning of time. However, despite the apparent simplicity of "just choose the best action", no comprehensive decision theory that resolves all decision theory dilemmas has yet been formalized. This paper at long last resolves this dilemma by introducing a new decision theory: VDT.

Decision theory problems and existing theories

Some common existing decision theories are: Causal Decision Theory (CDT): select the action that *causes* the best outcome. Evidential Decision Theory (EDT): select the action that you would be happiest to learn that you had taken. Functional Decision Theory (FDT): select the action output by the function such that if you take [...]

---

Outline:

(00:53) Decision theory problems and existing theories

(05:37) Defining VDT

(06:34) Experimental results

(07:48) Conclusion

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/LcjuHNxubQqCry9tT/vdt-a-solution-to-decision-theory

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Dear LessWrong community,

It is with a sense of... considerable cognitive dissonance that I announce a significant development regarding the future trajectory of LessWrong. After extensive internal deliberation, modeling of potential futures, projections of financial runways, and what I can only describe as a series of profoundly unexpected coordination challenges, the Lightcone Infrastructure team has agreed in principle to the acquisition of LessWrong by EA.

I assure you, nothing about how LessWrong operates on a day to day level will change. I have always cared deeply about the robustness and integrity of our institutions, and I am fully aligned with our stakeholders at EA.

To be honest, the key thing that EA brings to the table is money and talent. While the recent layoffs in EA's broader industry have been harsh, I have full trust in the leadership of Electronic Arts, and expect them to bring great expertise [...]

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/2NGKYt3xdQHwyfGbc/lesswrong-has-been-acquired-by-ea

---

Narrated by TYPE III AUDIO.

Our community is not prepared for an AI crash. We're good at tracking new capability developments, but not as much the company financials. Currently, both OpenAI and Anthropic are losing $5 billion+ a year, while under threat of losing users to cheap LLMs.

A crash will weaken the labs. Funding-deprived and distracted, execs struggle to counter coordinated efforts to restrict their reckless actions. Journalists turn on tech darlings. Optimism makes way for mass outrage, for all the wasted money and reckless harms.

You may not think a crash is likely. But if it happens, we can turn the tide. Preparing for a crash is our best bet.[1]

But our community is poorly positioned to respond. Core people positioned themselves inside institutions – to advise on how to maybe make AI 'safe', under the assumption that models rapidly become generally useful. After a crash, this no longer works, for at [...]

---

First published:

April 1st, 2025

Source:

https://www.lesswrong.com/posts/aMYFHnCkY4nKDEqfK/we-re-not-prepared-for-an-ai-market-crash

---

Narrated by TYPE III AUDIO.

Epistemic status: Reasonably confident in the basic mechanism.

Have you noticed that you keep encountering the same ideas over and over? You read another post, and someone helpfully points out it's just old Paul's idea again. Or Eliezer's idea. Not much progress here, move along.

Or perhaps you've been on the other side: excitedly telling a friend about some fascinating new insight, only to hear back, "Ah, that's just another version of X." And something feels not quite right about that response, but you can't quite put your finger on it.

I want to propose that while ideas are sometimes genuinely that repetitive, there's often a sneakier mechanism at play. I call it Conceptual Rounding Errors – when our mind's necessary compression goes a bit too far.

Too much compression

A Conceptual Rounding Error occurs when we encounter a new mental model or idea that's partially—but not fully—overlapping [...]

---

Outline:

(01:00) Too much compression

(01:24) No, This Isn't The Old Demons Story Again

(02:52) The Compression Trade-off

(03:37) More of this

(04:15) What Can We Do?

(05:28) When It Matters

---

First published:

March 26th, 2025

Source:

https://www.lesswrong.com/posts/FGHKwEGKCfDzcxZuj/conceptual-rounding-errors

---

Narrated by TYPE III AUDIO.

[This is our blog post on the papers, which can be found at https://transformer-circuits.pub/2025/attribution-graphs/biology.html and https://transformer-circuits.pub/2025/attribution-graphs/methods.html.]

Language models like Claude aren't programmed directly by humans—instead, they’re trained on large amounts of data. During that training process, they learn their own strategies to solve problems. These strategies are encoded in the billions of computations a model performs for every word it writes. They arrive inscrutable to us, the model's developers. This means that we don’t understand how models do most of the things they do.

Knowing how models like Claude think would allow us to have a better understanding of their abilities, as well as help us ensure that they’re doing what we intend them to. For example:

Claude can speak dozens of languages. What language, if any, is it using "in its head"?

Claude writes text one word at a time. Is it only focusing on predicting the [...]

---

Outline:

(06:02) How is Claude multilingual?

(07:43) Does Claude plan its rhymes?

(09:58) Mental Math

(12:04) Are Claude's explanations always faithful?

(15:27) Multi-step Reasoning

(17:09) Hallucinations

(19:36) Jailbreaks

---

First published:

March 27th, 2025

Source:

https://www.lesswrong.com/posts/zsr4rWRASxwmgXfmq/tracing-the-thoughts-of-a-large-language-model

---

Narrated by TYPE III AUDIO.

---

Images from the article:

About nine months ago, three friends and I decided that AI had gotten good enough to monitor large codebases autonomously for security problems. We started a company around this, trying to leverage the latest AI models to create a tool that could replace at least a good chunk of the value of human pentesters. We have been working on this project since June 2024.

Within the first three months of our company's existence, Claude 3.5 Sonnet was released. Just by switching the portions of our service that ran on gpt-4o, our nascent internal benchmark results immediately started to get saturated. I remember being surprised at the time that our tooling not only seemed to make fewer basic mistakes, but also seemed to qualitatively improve in its written vulnerability descriptions and severity estimates. It was as if the models were better at inferring the intent and values behind our [...]

---

Outline:

(04:44) Are the AI labs just cheating?

(07:22) Are the benchmarks not tracking usefulness?

(10:28) Are the models smart, but bottlenecked on alignment?

---

First published:

March 24th, 2025

Source:

https://www.lesswrong.com/posts/4mvphwx5pdsZLMmpY/recent-ai-model-progress-feels-mostly-like-bullshit

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
