ICLR 2024 — Best Papers & Talks (ImageGen, Vision, Transformers, State Space Models) ft. Durk Kingma, Christian Szegedy, Ilya Sutskever

Manage episode 420569297 series 3451473

Speakers for AI Engineer World’s Fair have been announced! See our Microsoft episode for more info and buy now with code LATENTSPACE1 — we’ve been studying the best ML research conferences so we can make the best AI industry conf!

Note that this year there are 4 main tracks per day and dozens of workshops/expo sessions; the free livestream will air much less than half of the content this time.

Apply for free/discounted Diversity Program and Scholarship tickets here. We hope to make this the definitive technical conference for ALL AI engineers.

UPDATE: This is a 2 part episode - see Part 2 here.

ICLR 2024 took place from May 6-11 in Vienna, Austria.

Just like we did for our extremely popular NeurIPS 2023 coverage, we decided to pay the $900 ticket (thanks to all of you paying supporters!) and brave the 18 hour flight and 5 day grind to go on behalf of all of you. We now present the results of that work!

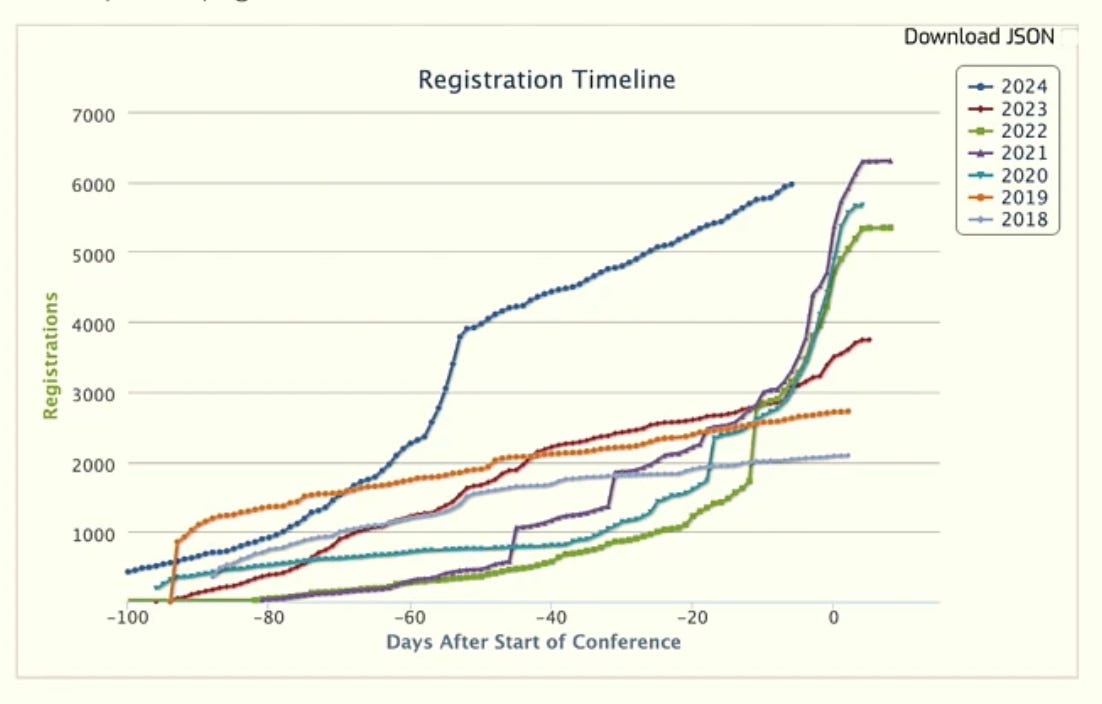

This ICLR was the biggest one by far, with a marked change in the excitement trajectory for the conference:

Of the 2260 accepted papers (31% acceptance rate), of the subset of those relevant to our shortlist of AI Engineering Topics, we found many, many LLM reasoning and agent related papers, which we will cover in the next episode. We will spend this episode with 14 papers covering other relevant ICLR topics, as below.

As we did last year, we’ll start with the Best Paper Awards. Unlike last year, we now group our paper selections by subjective topic area, and mix in both Outstanding Paper talks as well as editorially selected poster sessions. Where we were able to do a poster session interview, please scroll to the relevant show notes for images of their poster for discussion. To cap things off, Chris Ré’s spot from last year now goes to Sasha Rush for the obligatory last word on the development and applications of State Space Models.

We had a blast at ICLR 2024 and you can bet that we’ll be back in 2025 🇸🇬.

Timestamps and Overview of Papers

[00:02:49] Section A: ImageGen, Compression, Adversarial Attacks

[00:02:49] VAEs

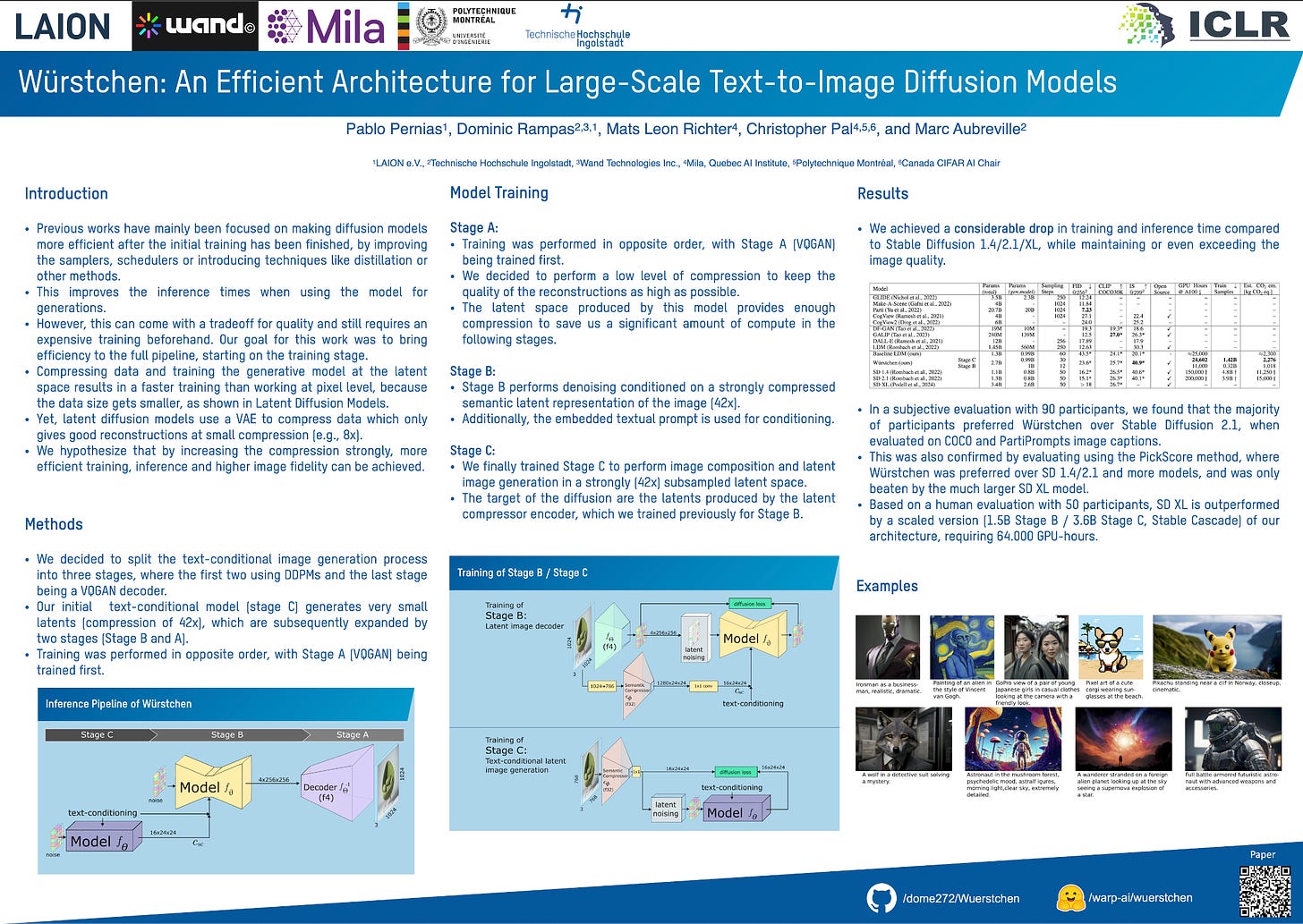

[00:32:36] Würstchen: An Efficient Architecture for Large-Scale Text-to-Image Diffusion Models

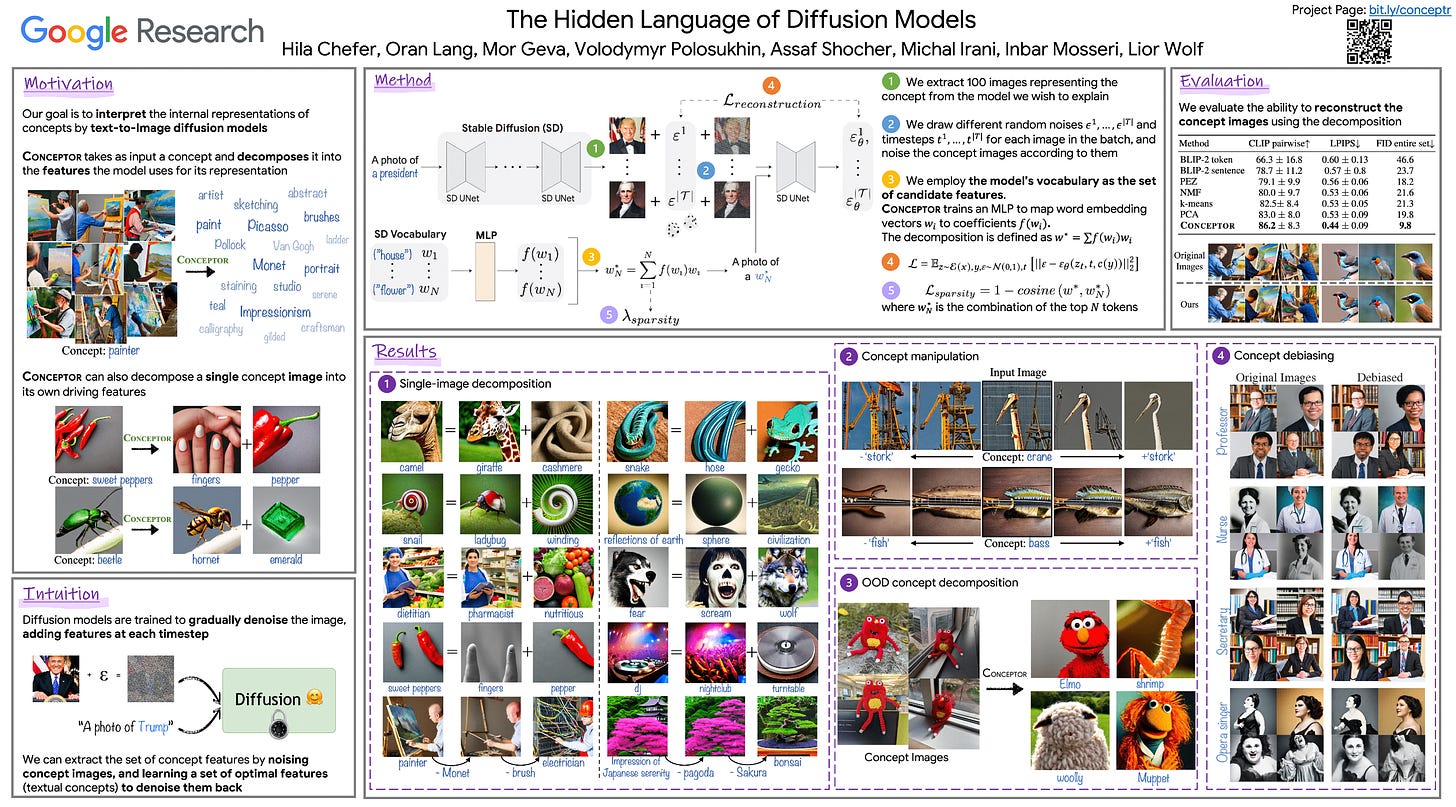

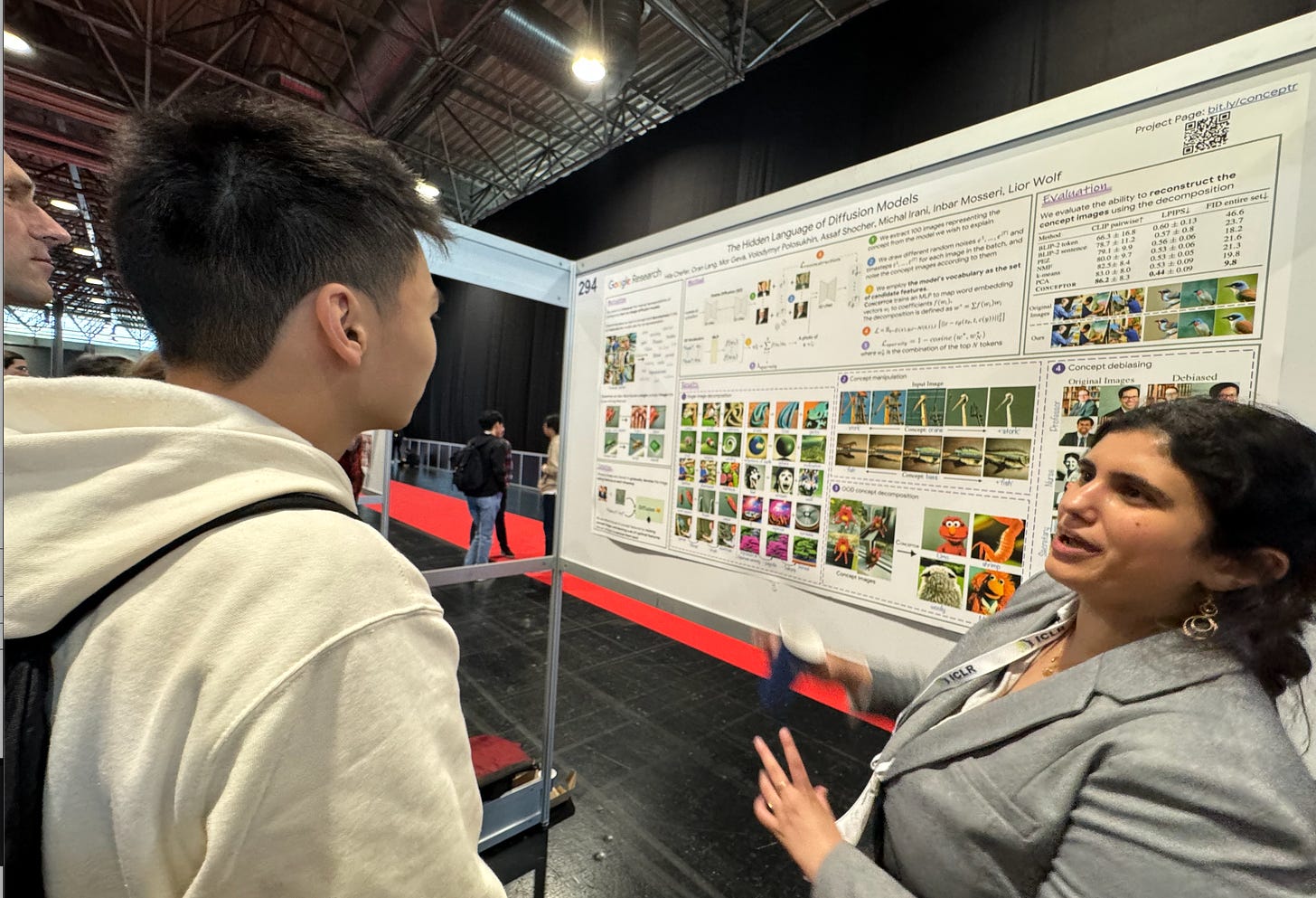

[00:37:25] The Hidden Language Of Diffusion Models

[00:48:40] Ilya on Compression

[01:01:45] Christian Szegedy on Compression

[01:07:34] Intriguing properties of neural networks

[01:26:07] Section B: Vision Learning and Weak Supervision

[01:26:45] Vision Transformers Need Registers

[01:38:27] Think before you speak: Training Language Models With Pause Tokens

[01:47:06] Towards a statistical theory of data selection under weak supervision

[02:00:32] Is ImageNet worth 1 video?

[02:06:32] Section C: Extending Transformers and Attention

[02:06:49] LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models

[02:15:12] YaRN: Efficient Context Window Extension of Large Language Models

[02:32:02] Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs

[02:44:57] ZeRO++: Extremely Efficient Collective Communication for Giant Model Training

[02:54:26] Section D: State Space Models vs Transformers

[03:31:15] Never Train from Scratch: Fair Comparison of Long-Sequence Models Requires Data-Driven Priors

[03:37:08] End of Part 1

A: ImageGen, Compression, Adversarial Attacks

Durk Kingma (OpenAI/Google DeepMind) & Max Welling: Auto-Encoding Variational Bayes (Full ICLR talk)

Preliminary resources: Understanding VAEs, CodeEmporium, Arxiv Insights

Inaugural ICLR Test of Time Award! “Probabilistic modeling is one of the most fundamental ways in which we reason about the world. This paper spearheaded the integration of deep learning with scalable probabilistic inference (amortized mean-field variational inference via a so-called reparameterization trick), giving rise to the Variational Autoencoder (VAE).”

Pablo Pernías (Stability) et al: Würstchen: An Efficient Architecture for Large-Scale Text-to-Image Diffusion Models (ICLR oral, poster)

Hila Chefer et al (Google Research): Hidden Language Of Diffusion Models (poster)

See also: Google Lumiere, Attend and Excite

Christian Szegedy (X.ai): Intriguing properties of neural networks (Full ICLR talk)

Inaugural Test of Time Award runner up: “With the rising popularity of deep neural networks in real applications, it is important to understand when and how neural networks might behave in undesirable ways. This paper highlighted the issue that neural networks can be vulnerable to small almost imperceptible variations to the input. This idea helped spawn the area of adversarial attacks (trying to fool a neural network) as well as adversarial defense (training a neural network to not be fooled). “

with Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, Rob Fergus

B: Vision Learning and Weak Supervision

Timothée Darcet (Meta) et al : Vision Transformers Need Registers (ICLR oral, Paper)

ICLR Outstanding Paper Award: “This paper identifies artifacts in feature maps of vision transformer networks, characterized by high-norm tokens in low-informative background areas. The authors provide key hypotheses for why this is happening and provide a simple yet elegant solution to address these artifacts using additional register tokens, enhancing model performance on various tasks. The insights gained from this work can also impact other application areas. The paper is very well-written and provides a great example of conducting research – identifying an issue, understanding why it is happening, and then providing a solution.“

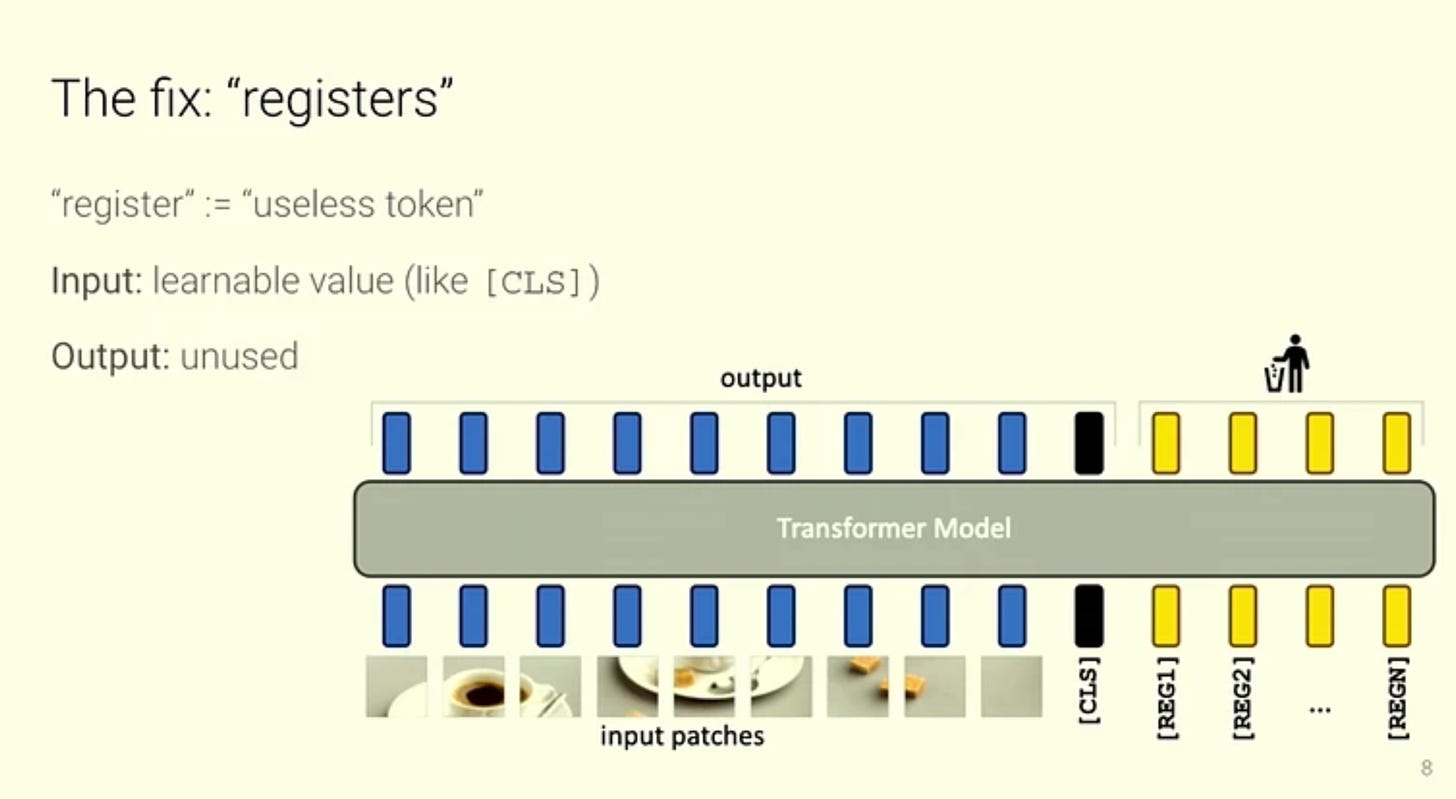

HN discussion: “According to the paper, the "registers" are additional learnable tokens that are appended to the input sequence of a Vision Transformer model during training. They are added after the patch embedding layer, with a learnable value, similar to the [CLS] token and then at the end of the Vision Transformer, the register tokens are discarded, and only the [CLS] token and patch tokens are used as image representations.

The register tokens provide a place for the model to store, process and retrieve global information during the forward pass, without repurposing patch tokens for this role.

Adding register tokens removes the artifacts and high-norm "outlier" tokens that otherwise appear in the feature maps of trained Vision Transformer models. Using register tokens leads to smoother feature maps, improved performance on dense prediction tasks, and enables better unsupervised object discovery compared to the same models trained without the additional register tokens. This is a neat result. For just a 2% increase in inference cost, you can significantly improve ViT model performance. Close to a free lunch.”

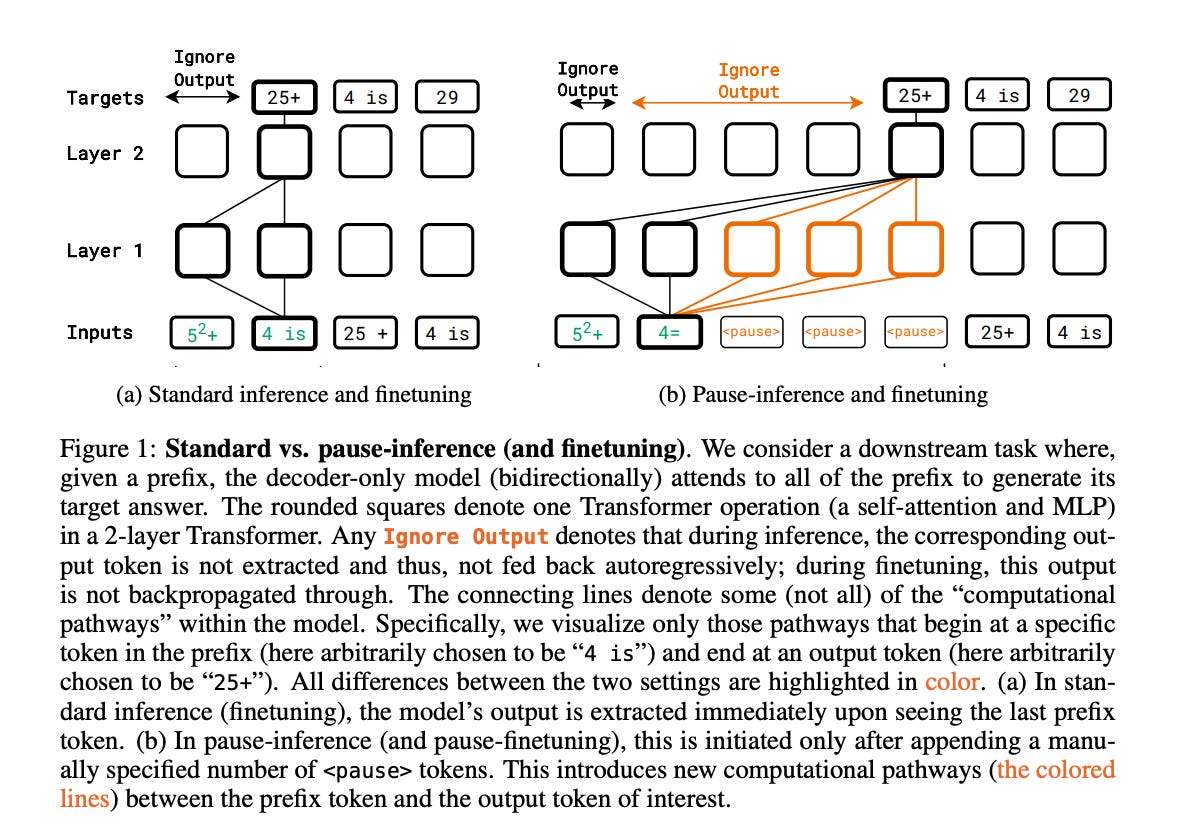

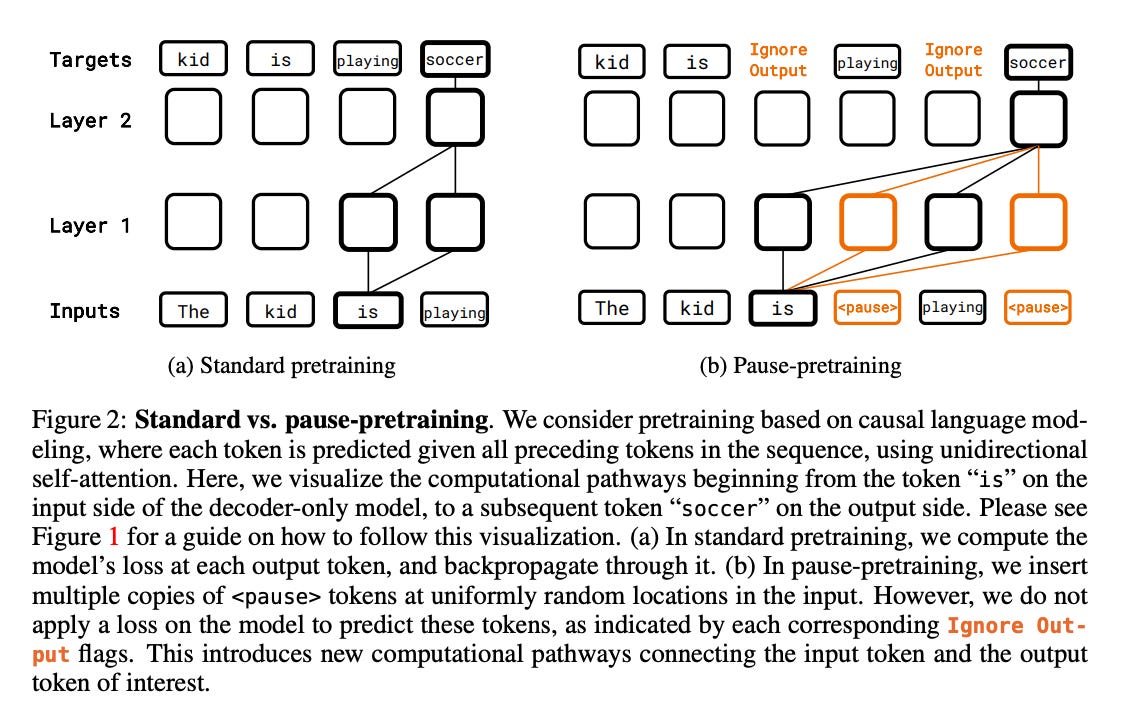

Sachin Goyal (Google) et al: Think before you speak: Training Language Models With Pause Tokens (OpenReview)

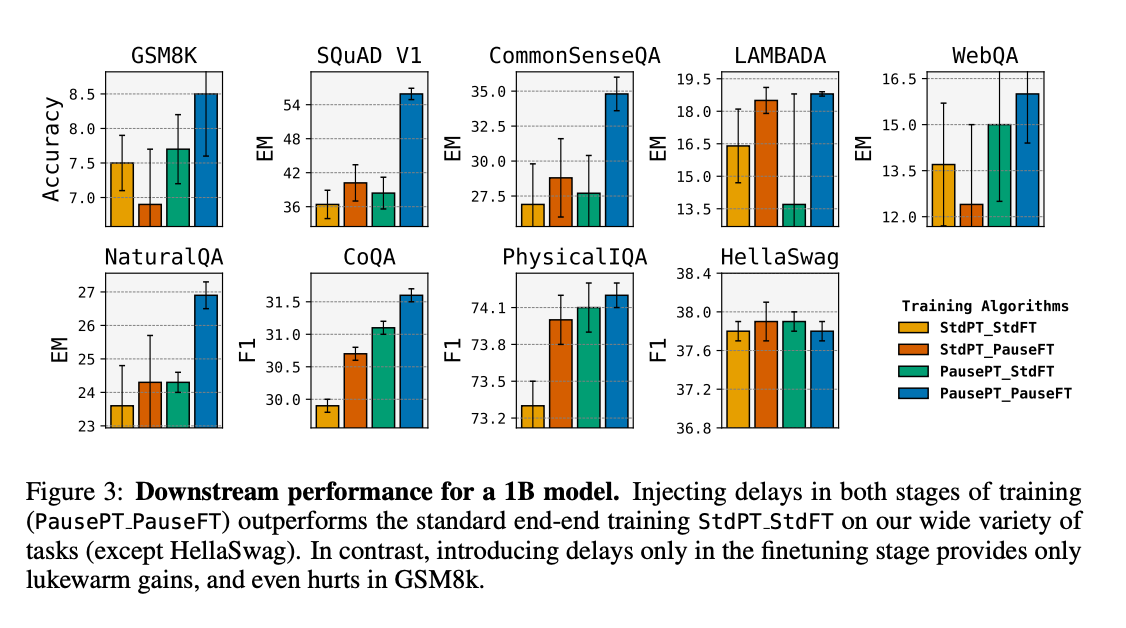

We operationalize this idea by performing training and inference on language models with a (learnable) pause token, a sequence of which is appended to the input prefix. We then delay extracting the model's outputs until the last pause token is seen, thereby allowing the model to process extra computation before committing to an answer. We empirically evaluate pause-training on decoder-only models of 1B and 130M parameters with causal pretraining on C4, and on downstream tasks covering reasoning, question-answering, general understanding and fact recall.

Our main finding is that inference-time delays show gains when the model is both pre-trained and finetuned with delays. For the 1B model, we witness gains on 8 of 9 tasks, most prominently, a gain of 18% EM score on the QA task of SQuAD, 8% on CommonSenseQA and 1% accuracy on the reasoning task of GSM8k. Our work raises a range of conceptual and practical future research questions on making delayed next-token prediction a widely applicable new paradigm.

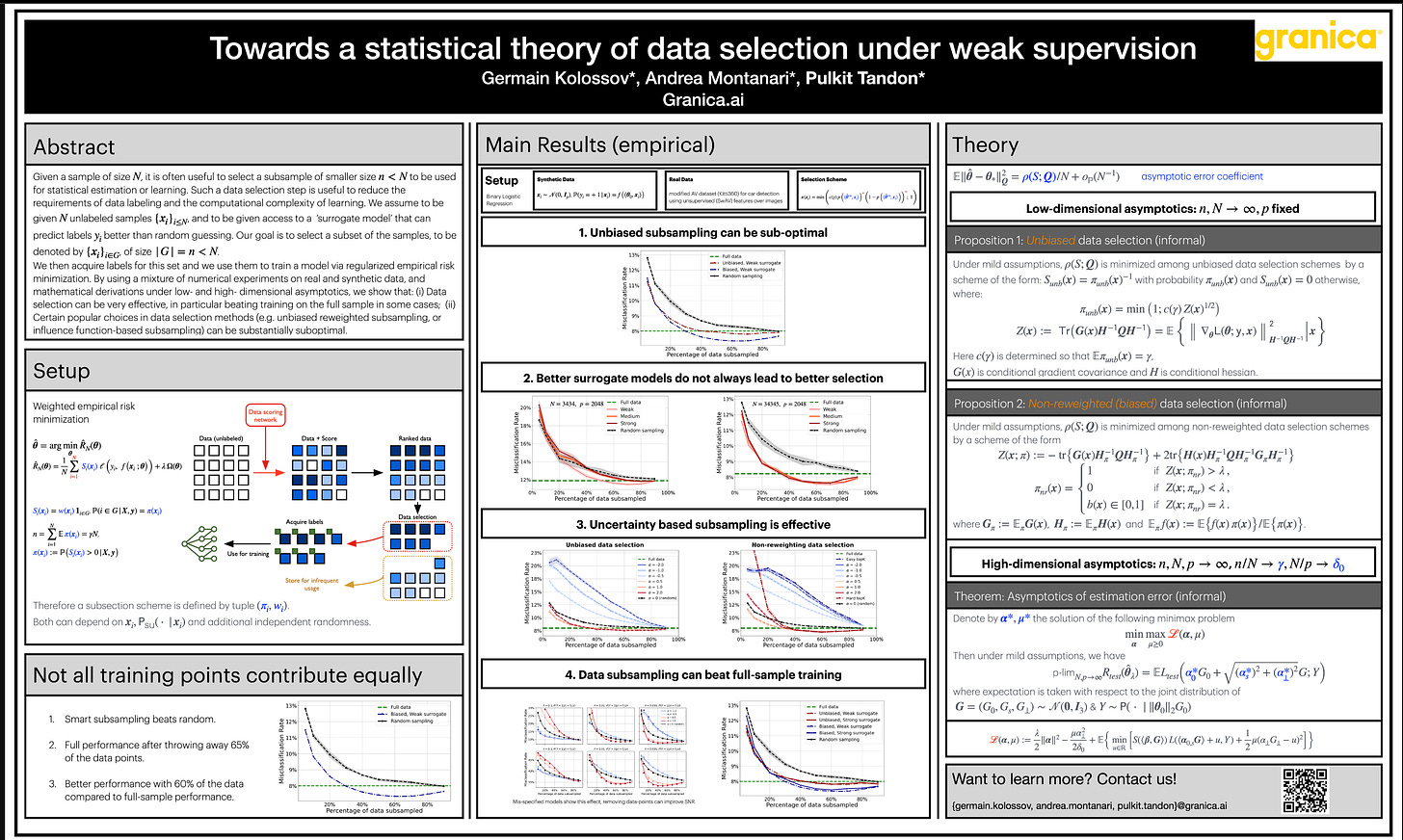

Pulkit Tandon (Granica) et al: Towards a statistical theory of data selection under weak supervision (ICLR Oral, Poster, Paper)

Honorable Mention: “The paper establishes statistical foundations for data subset selection and identifies the shortcomings of popular data selection methods.”

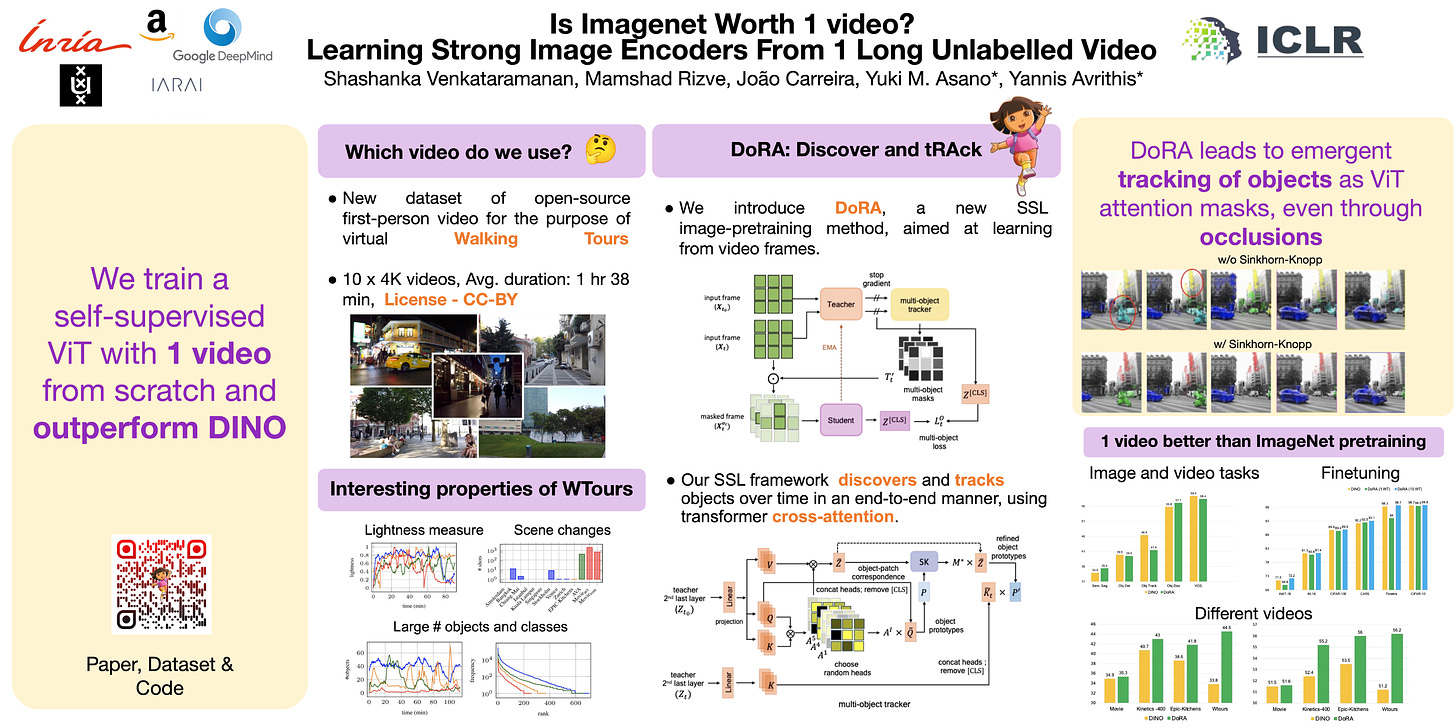

Shashank Venkataramanan (Inria) et al: Is ImageNet worth 1 video? Learning strong image encoders from 1 long unlabelled video (ICLR Oral, paper)

First, we investigate first-person videos and introduce a "Walking Tours" dataset. These videos are high-resolution, hours-long, captured in a single uninterrupted take, depicting a large number of objects and actions with natural scene transitions. They are unlabeled and uncurated, thus realistic for self-supervision and comparable with human learning.

Second, we introduce a novel self-supervised image pretraining method tailored for learning from continuous videos. Existing methods typically adapt image-based pretraining approaches to incorporate more frames. Instead, we advocate a "tracking to learn to recognize" approach. Our method called DoRA leads to attention maps that DiscOver and tRAck objects over time in an end-to-end manner, using transformer cross-attention. We derive multiple views from the tracks and use them in a classical self-supervised distillation loss. Using our novel approach, a single Walking Tours video remarkably becomes a strong competitor to ImageNet for several image and video downstream tasks.

Honorable Mention: “The paper proposes a novel path to self-supervised image pre-training, by learning from continuous videos. The paper contributes both new types of data and a method to learn from novel data.“

C: Extending Transformers and Attention

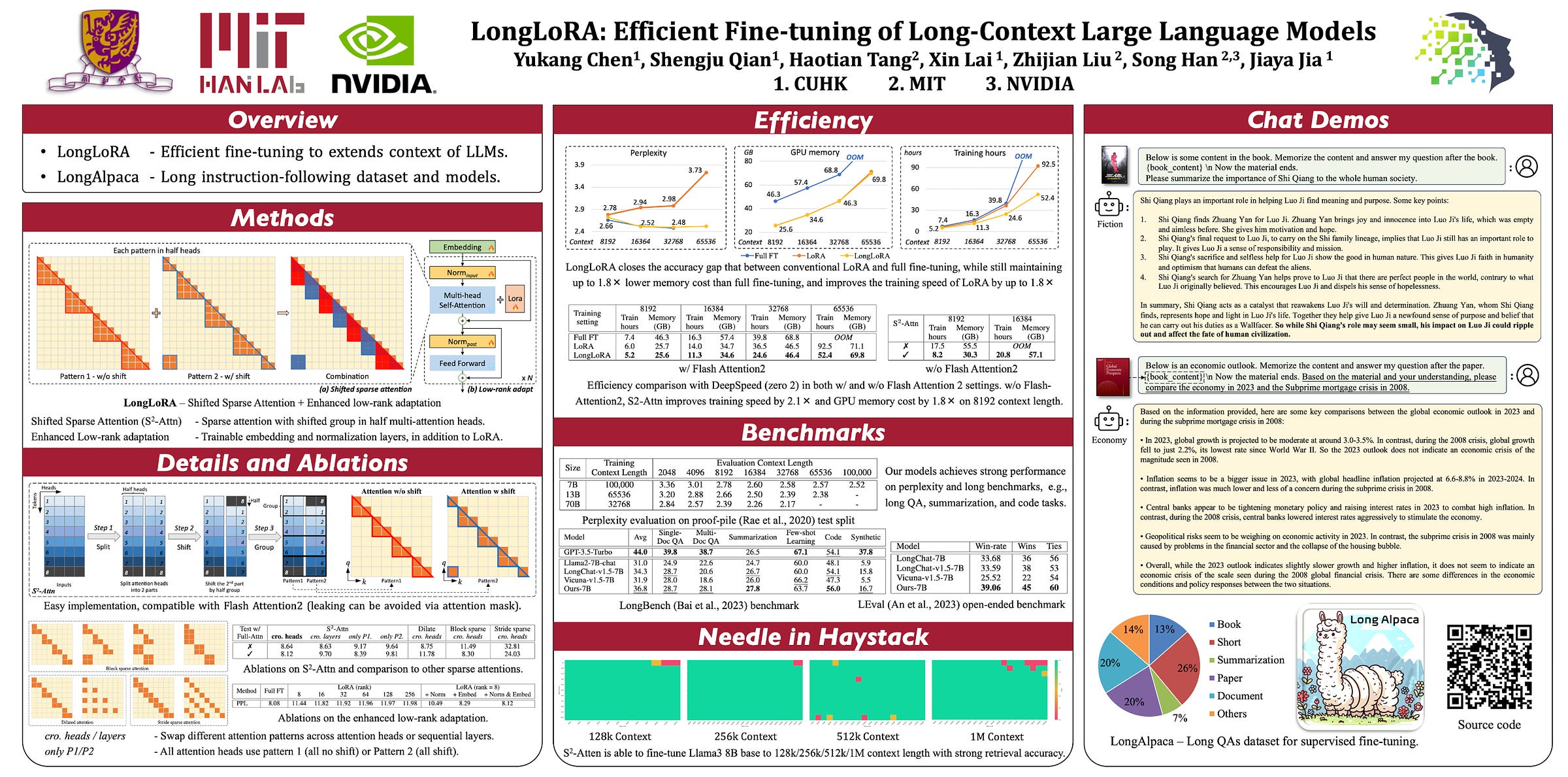

Yukang Chen (CUHK) et al: LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models (ICLR Oral, Poster)

We present LongLoRA, an efficient fine-tuning approach that extends the context sizes of pre-trained large language models (LLMs), with limited computation cost. LongLoRA extends Llama2 7B from 4k context to 100k, or Llama2 70B to 32k on a single 8x A100 machine. LongLoRA extends models' context while retaining their original architectures, and is compatible with most existing techniques, like Flash-Attention2.

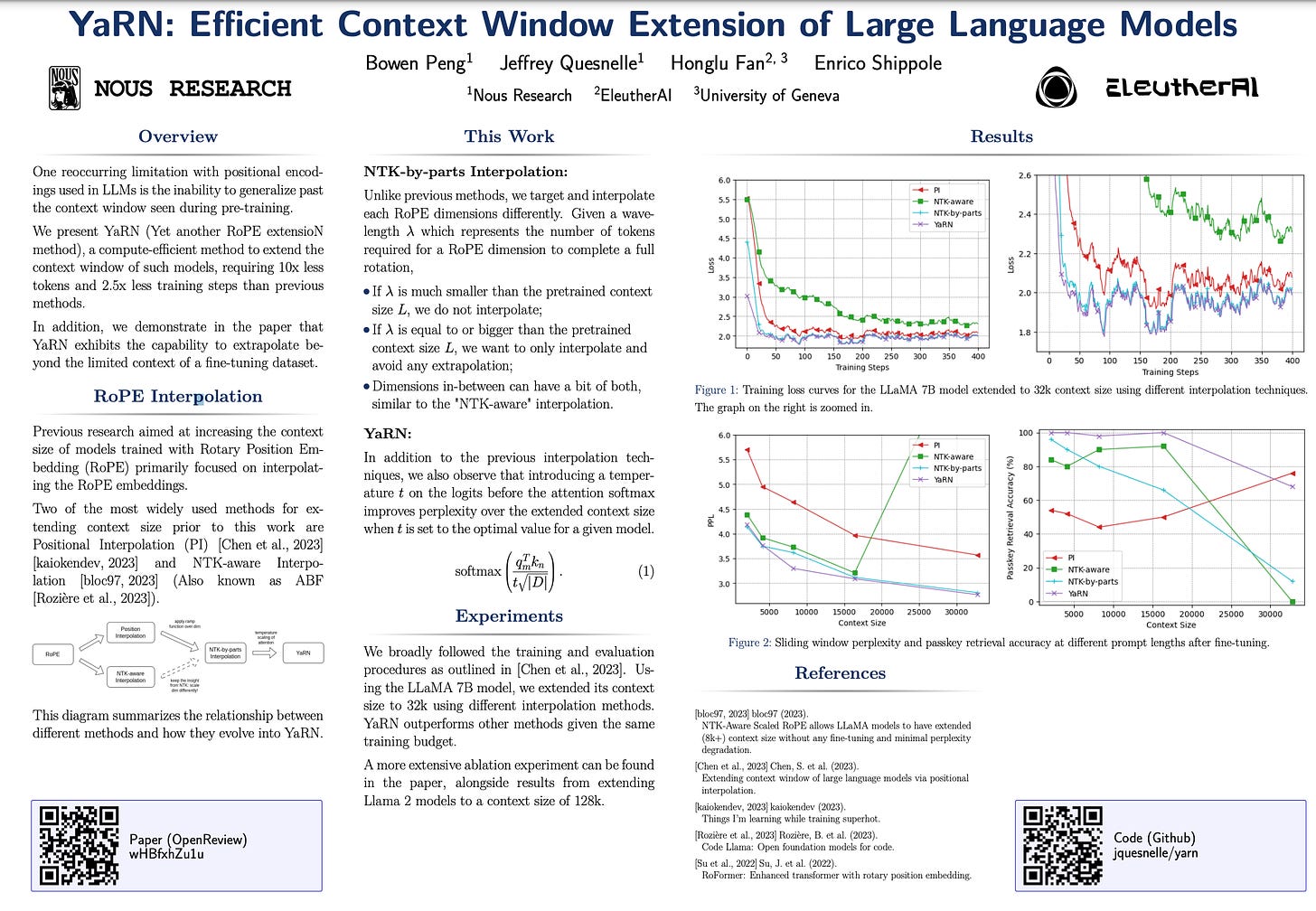

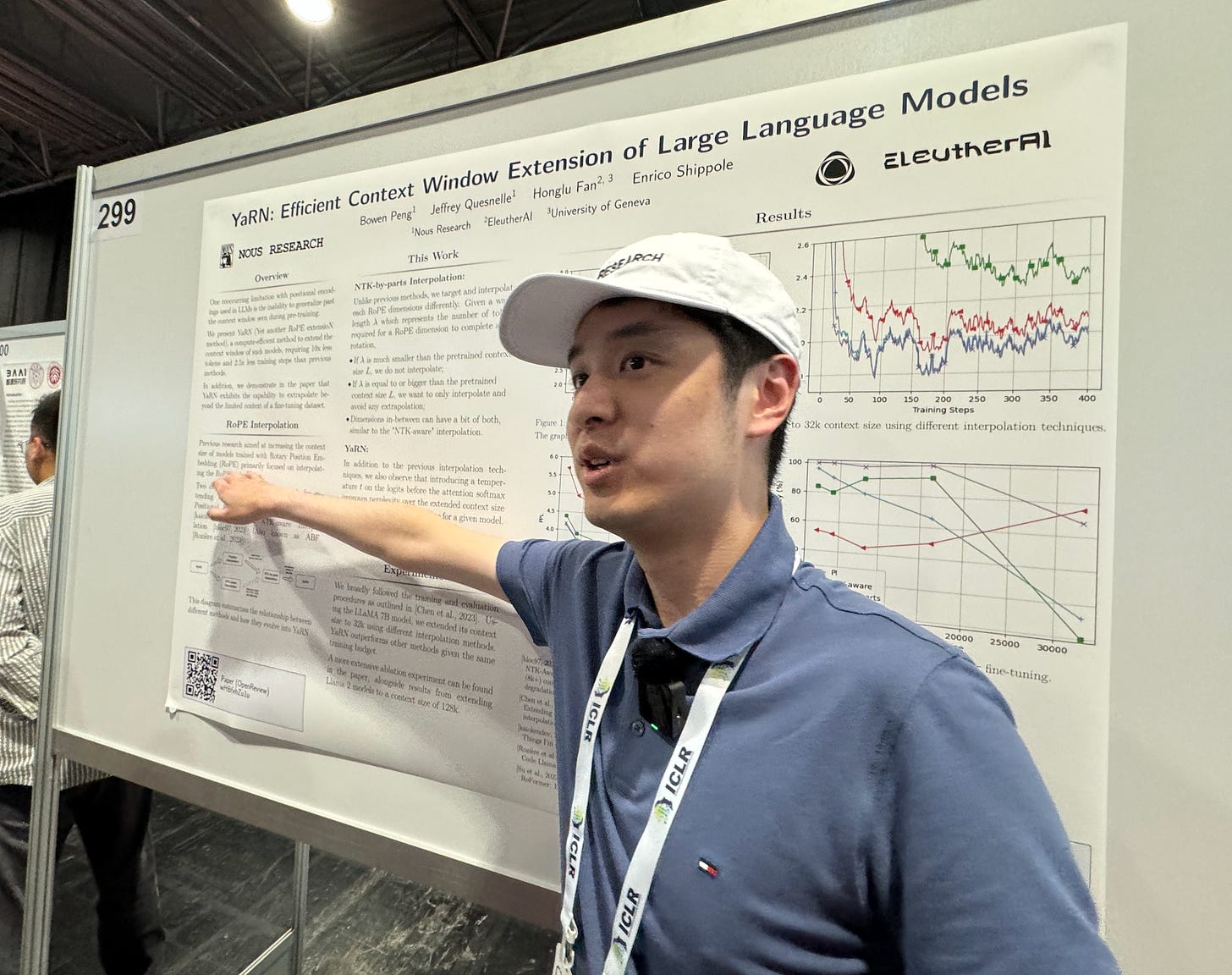

Bowen Peng (Nous Research) et al: YaRN: Efficient Context Window Extension of Large Language Models (Poster, Paper)

Rotary Position Embeddings (RoPE) have been shown to effectively encode positional information in transformer-based language models. However, these models fail to generalize past the sequence length they were trained on. We present YaRN (Yet another RoPE extensioN method), a compute-efficient method to extend the context window of such models, requiring 10x less tokens and 2.5x less training steps than previous methods. Using YaRN, we show that LLaMA models can effectively utilize and extrapolate to context lengths much longer than their original pre-training would allow, while also surpassing previous the state-of-the-art at context window extension. In addition, we demonstrate that YaRN exhibits the capability to extrapolate beyond the limited context of a fine-tuning dataset. The models fine-tuned using YaRN has been made available and reproduced online up to 128k context length.

Mentioned papers: Kaikoendev on TILs While Training SuperHOT, LongRoPE, Ring Attention, InfiniAttention, Textbooks are all you need and the Synthetic Data problem

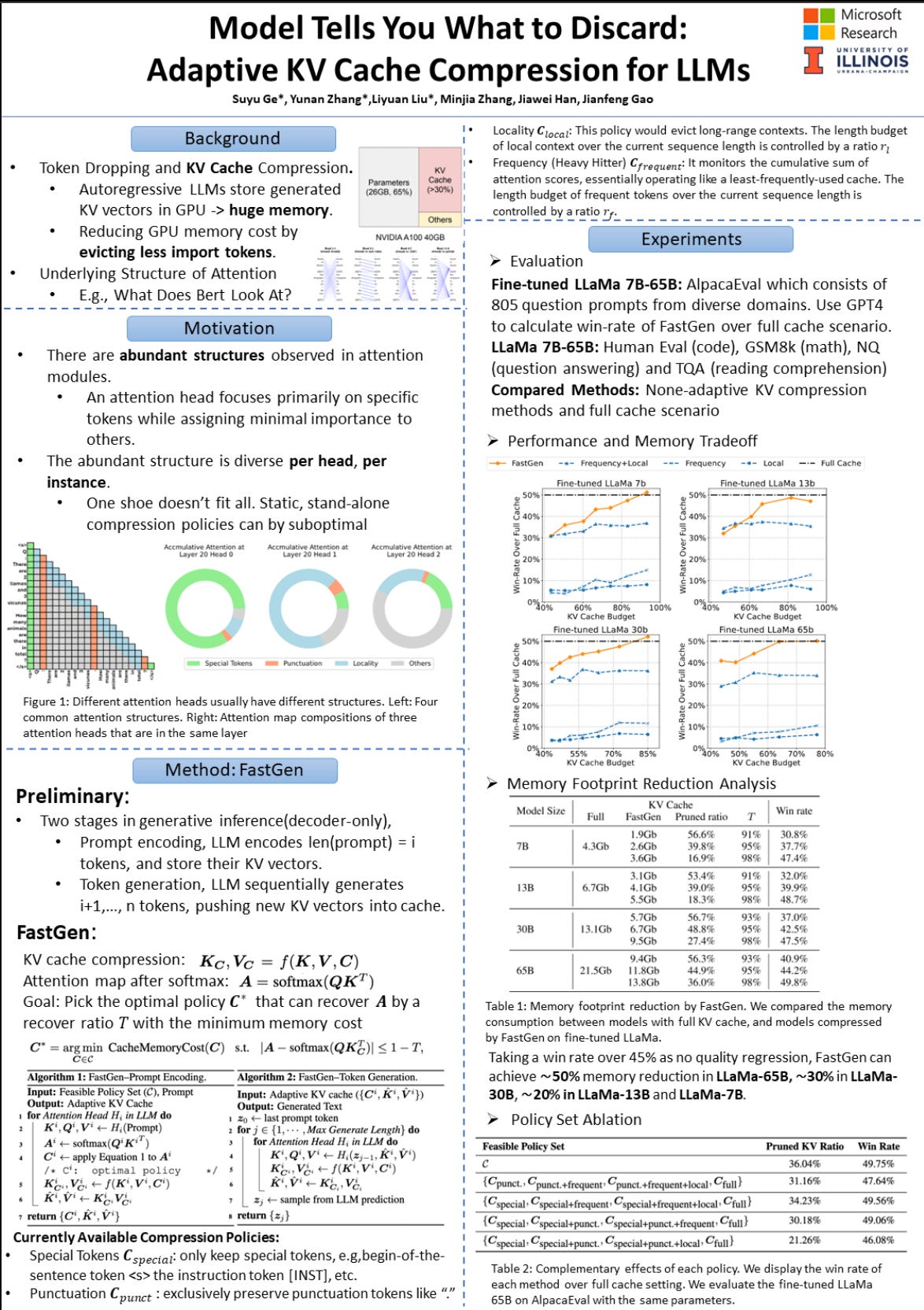

Suyu Ge et al: Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs (aka FastGen. ICLR Oral, Poster, Paper)

“We introduce adaptive KV cache compression, a plug-and-play method that reduces the memory footprint of generative inference for Large Language Models (LLMs). Different from the conventional KV cache that retains key and value vectors for all context tokens, we conduct targeted profiling to discern the intrinsic structure of attention modules. Based on the recognized structure, we then construct the KV cache in an adaptive manner: evicting long-range contexts on attention heads emphasizing local contexts, discarding non-special tokens on attention heads centered on special tokens, and only employing the standard KV cache for attention heads that broadly attend to all tokens. In our experiments across various asks, FastGen demonstrates substantial reduction on GPU memory consumption with negligible generation quality loss. ”

40% memory reduction for Llama 67b

Honorable Mention: “The paper targets the critical KV cache compression problem with great impact on transformer based LLMs, reducing the memory with a simple idea that can be deployed without resource intensive fine-tuning or re-training. The approach is quite simple and yet is shown to be quite effective.”

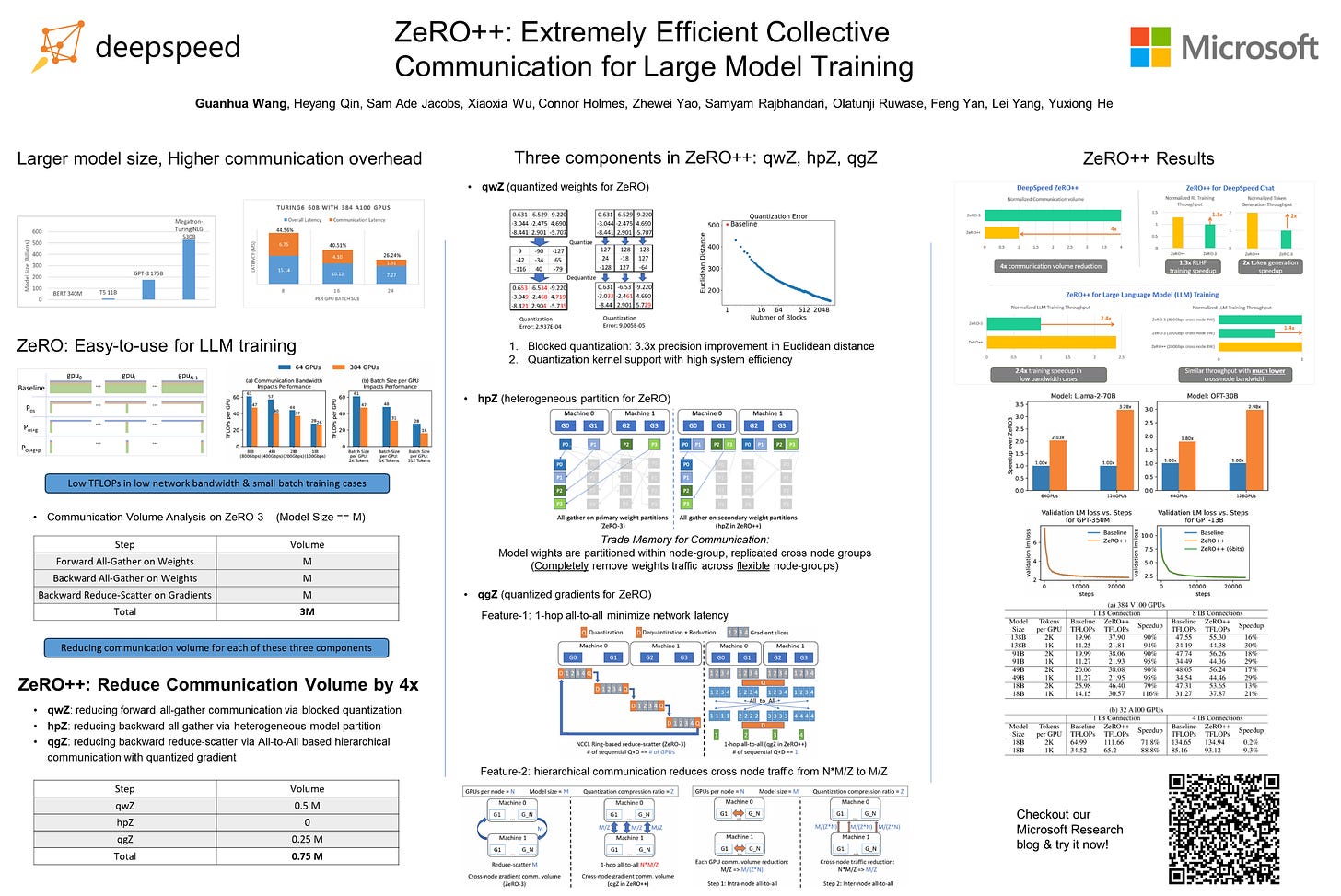

Guanhua Wang (DeepSpeed) et al, ZeRO++: Extremely Efficient Collective Communication for Giant Model Training (paper, poster, blogpost)

Zero Redundancy Optimizer (ZeRO) has been used to train a wide range of large language models on massive GPUs clusters due to its ease of use, efficiency, and good scalability. However, when training on low-bandwidth clusters, or at scale which forces batch size per GPU to be small, ZeRO's effective throughput is limited because of high communication volume from gathering weights in forward pass, backward pass, and averaging gradients. This paper introduces three communication volume reduction techniques, which we collectively refer to as ZeRO++, targeting each of the communication collectives in ZeRO.

Collectively, ZeRO++ reduces communication volume of ZeRO by 4x, enabling up to 2.16x better throughput at 384 GPU scale.

Mentioned: FSDP + QLoRA

Poster Session Picks

We ran out of airtime to include these in the podcast, but we recorded interviews with some of these authors and could share audio on request.

Summarization

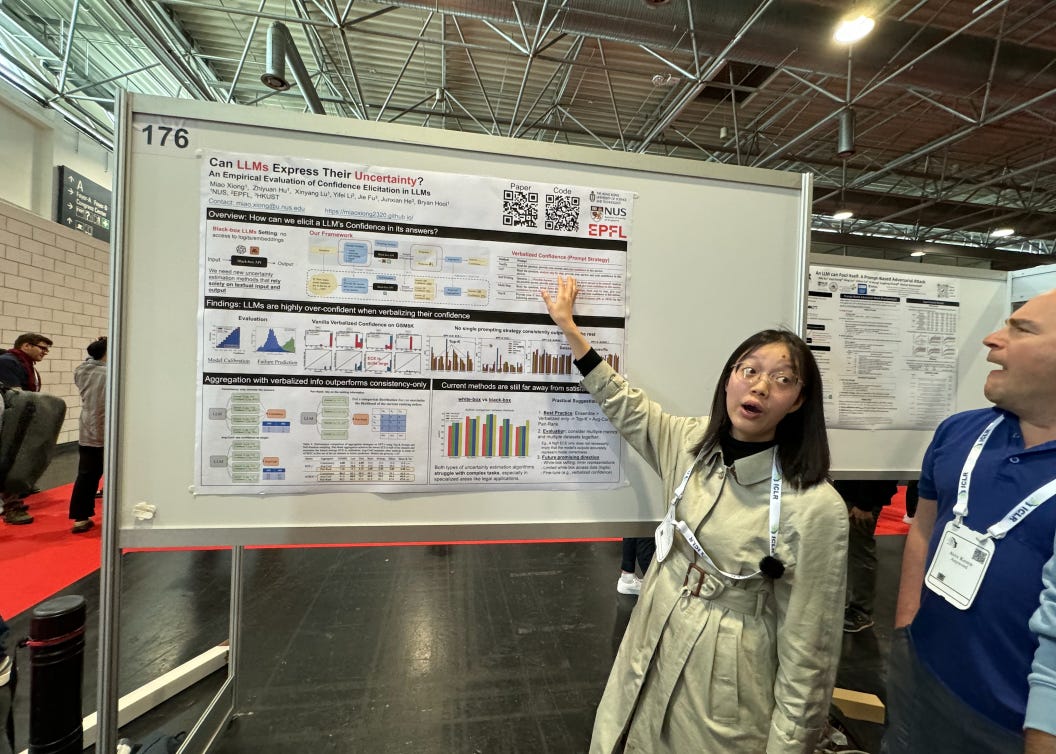

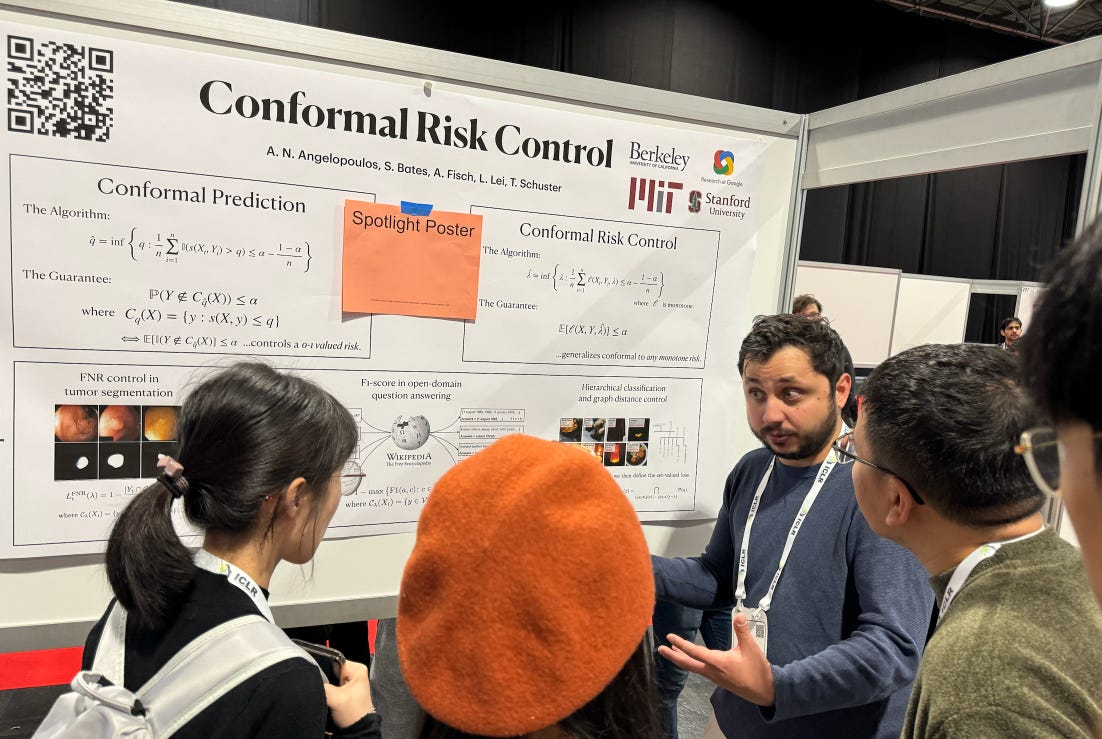

Uncertainty

Tabular Data

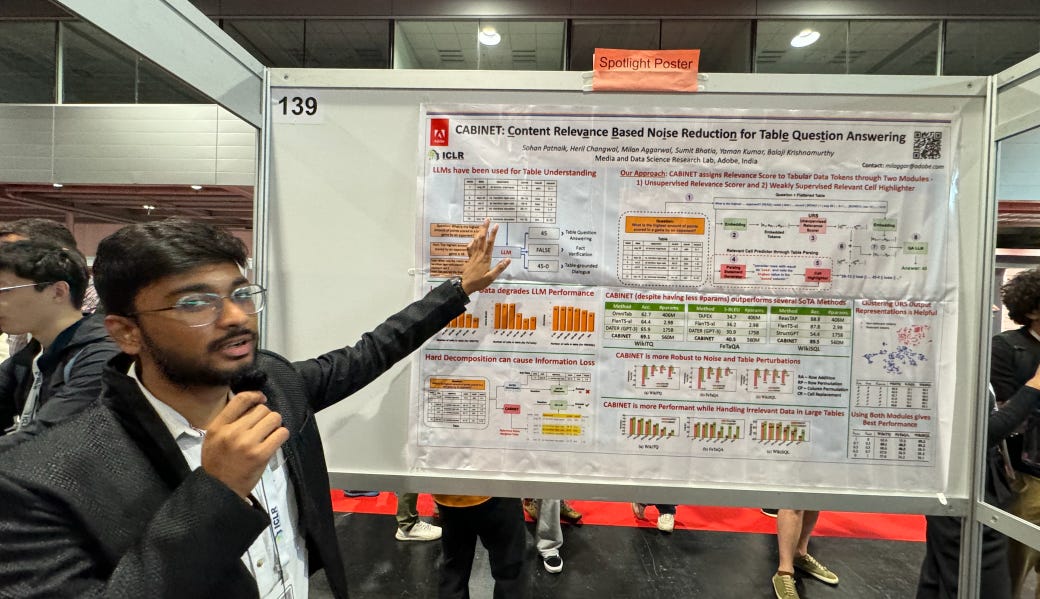

CABINET: Content Relevance-based Noise Reduction for Table Question Answering

Mixed-Type Tabular Data Synthesis with Score-based Diffusion in Latent Space

Making Pre-trained Language Models Great on Tabular Prediction

How Realistic Is Your Synthetic Data? Constraining Deep Generative Models for Tabular Data

Watermarking (there were >24 papers on watermarking, both for and against!!)

Misc

D: State Space Models vs Transformers

Sasha Rush’s State Space Models ICLR invited talk on workshop day

Ido Amos (IBM) et al: Never Train from Scratch: Fair Comparison of Long-Sequence Models Requires Data-Driven Priors (ICLR Oral)

Modeling long-range dependencies across sequences is a longstanding goal in machine learning and has led to architectures, such as state space models, that dramatically outperform Transformers on long sequences.

However, these impressive empirical gains have been by and large demonstrated on benchmarks (e.g. Long Range Arena), where models are randomly initialized and trained to predict a target label from an input sequence. In this work, we show that random initialization leads to gross overestimation of the differences between architectures.

In stark contrast to prior works, we find vanilla Transformers to match the performance of S4 on Long Range Arena when properly pretrained, and we improve the best reported results of SSMs on the PathX-256 task by 20 absolute points.

Subsequently, we analyze the utility of previously-proposed structured parameterizations for SSMs and show they become mostly redundant in the presence of data-driven initialization obtained through pretraining. Our work shows that, when evaluating different architectures on supervised tasks, incorporation of data-driven priors via pretraining is essential for reliable performance estimation, and can be done efficiently.

Outstanding Paper Award: “This paper dives deep into understanding the ability of recently proposed state-space models and transformer architectures to model long-term sequential dependencies. Surprisingly, the authors find that training transformer models from scratch leads to an under-estimation of their performance and demonstrates dramatic gains can be achieved with a pre-training and fine-tuning setup. The paper is exceptionally well executed and exemplary in its focus on simplicity and systematic insights.”

If you need a bigger discount to make it work - use coupon AINEWS.

77 afleveringen